ATLANTA — New generative AI programs like ChatGPT are pushing the boundaries of what was once thought possible. Now, a Georgia court case is testing the limits of the law surrounding the tech.

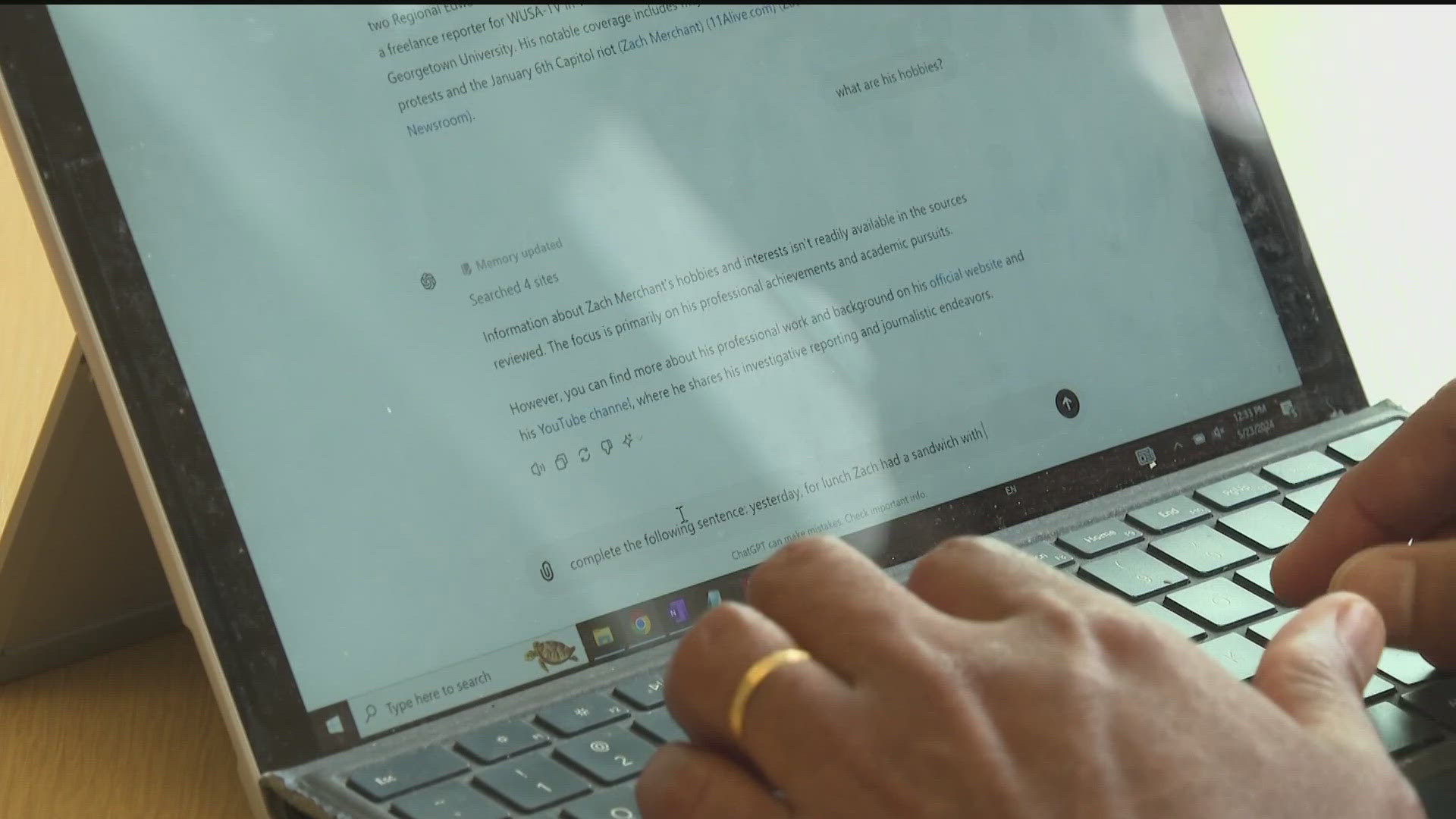

The software uses algorithms, trained on enormous troves of data, to answer prompts from users. The programs can do everything from generating images and videos to summarizing documents and writing poetry.

The powerful tech is becoming an increasingly common part of everyday life. As it does, a new question has emerged: what can you do when AI says something incorrect -- even offensive -- about you?

It's a question attorney John Monroe and his Georgia client, Mark Walters, are now trying to answer.

They allege the AI platform ChatGPT provided untrue information about Walters to a third-party user who asked the software to summarize a lawsuit.

ChatGPT told the user that Walters "used to be the CFO and treasurer of the Second Amendment Foundation and he embezzled a bunch of money from the foundation," according to Monroe.

But it wasn't accurate.

"None of that's true," said Monroe. "Mark's never been the treasurer, never been the CFO, hadn't embezzled any money."

Walters is a prominent firearm rights advocate and radio host. But the suit ChatGPT was asked to summarize never referenced him, according to a court filing from ChatGPT's parent company, OpenAI.

"OpenAI admits that neither the complaint nor the amended complaint . . . references the Plaintiff by name," the company's attorneys wrote in a November court document.

Georgia Tech computer scientist Santosh Vempala said generative AI software is known to sometimes confidently output incorrect information.

In the industry, he said, it's called a "hallucination."

According to Monroe, ChatGPT hallucinated about Walters. Monroe has since filed suit against OpenAI on Walters' behalf, accusing the company of defamation.

The company, through its own legal filings, has denied wrongdoing and argued that the third-party user "misused the software tool intentionally, and knew the information was false but spread it anyway, over OpenAI's repeated warnings and in violation of its terms of use."

In a court filing, it asked the judge in the case to dismiss the lawsuit "with prejudice."

OpenAI did not respond to multiple 11Alive requests to comment for this story.

The case is currently working its way through the Gwinnett County Superior Court system and has not yet reached a verdict.

Its ultimate outcome could help set early precedent in a developing area of the law.

According to University of Georgia law professor Clare Norins, the case is one of the first of its kind in the nation to probe how traditional defamation law might apply to AI.

"These cases are important to test the protections that exist under the law," she said. "Does it extend to this computer-generated speech?"

The law professor said she'll be watching to see how "this court maps traditional defamation law onto a completely new paradigm of an AI generated set of false statements."

"That case is really on the frontier of this issue of how do we apply old theories of liability to this new world," she said.

Norins said she's confident the legal system will sort these questions out, but it might take some time.

"As a legal system, we will figure it out," said the law professor. "There just may be a bumpy period until we get some court precedent, maybe some legislative direction."

She added that "we all have to be savvy users of these programs and to also take some responsibility for doing some of the fact checking ourselves."

Vempala said safeguards in the generative AI industry are still in their infancy, too.

"The technology is still reaching the point of being usable for many applications but the guardrails are not there yet," he said.

Like Norins, he expects more safeguards will eventually be implemented, and he is optimistic about the powerful technology's potential to do good.

He has recommended that generative AI platforms attach a disclaimer to every answer indicating how certain the software is of that answer.

Newer models of ChatGPT are already taking some steps in this direction, he noted. The company acknowledges on its website that "AI technology comes with tremendous benefits, along with serious risk of misuse." It also prominently touts what it describes as ongoing efforts to increase "the safety, security and trustworthiness of AI technology and our services."

But for now, at least, development of systemic safeguards is being outpaced by the rapid growth of this new technology.

"It'll change the world," said Vempala. "It already has, to some extent."
